How to Turn Slack Messages into Pull Requests with Cursor and Linear

Welcome to Pulling Levers - behind-the-scenes lessons, hard-won insights, and unexpected learnings from building Lleverage.

Linear's recent introduction of Cursor agents has unlocked something remarkable: a fully automated workflow from client bug report to deployable preview branch, all without opening your code editor. Read more about the integration here.

Clients and team members naturally brain dump valuable insights into Slack - detailed bug reproductions, feature ideas, edge cases they've discovered. Traditionally, someone had to manually transform these stream-of-consciousness messages into tickets, prioritize them, assign them, and eventually get around to implementation. Now, a simple 🎫 emoji creates a Linear ticket, and if it's straightforward enough, our Cursor agent picks it up and delivers a PR with a preview branch within an hour.

This isn't theoretical - we're running this in production. The integration has transformed how we handle routine fixes and features, freeing our engineers to focus on more interesting and creative work.

The Full Automation Pipeline

Here's the complete flow that makes this possible:

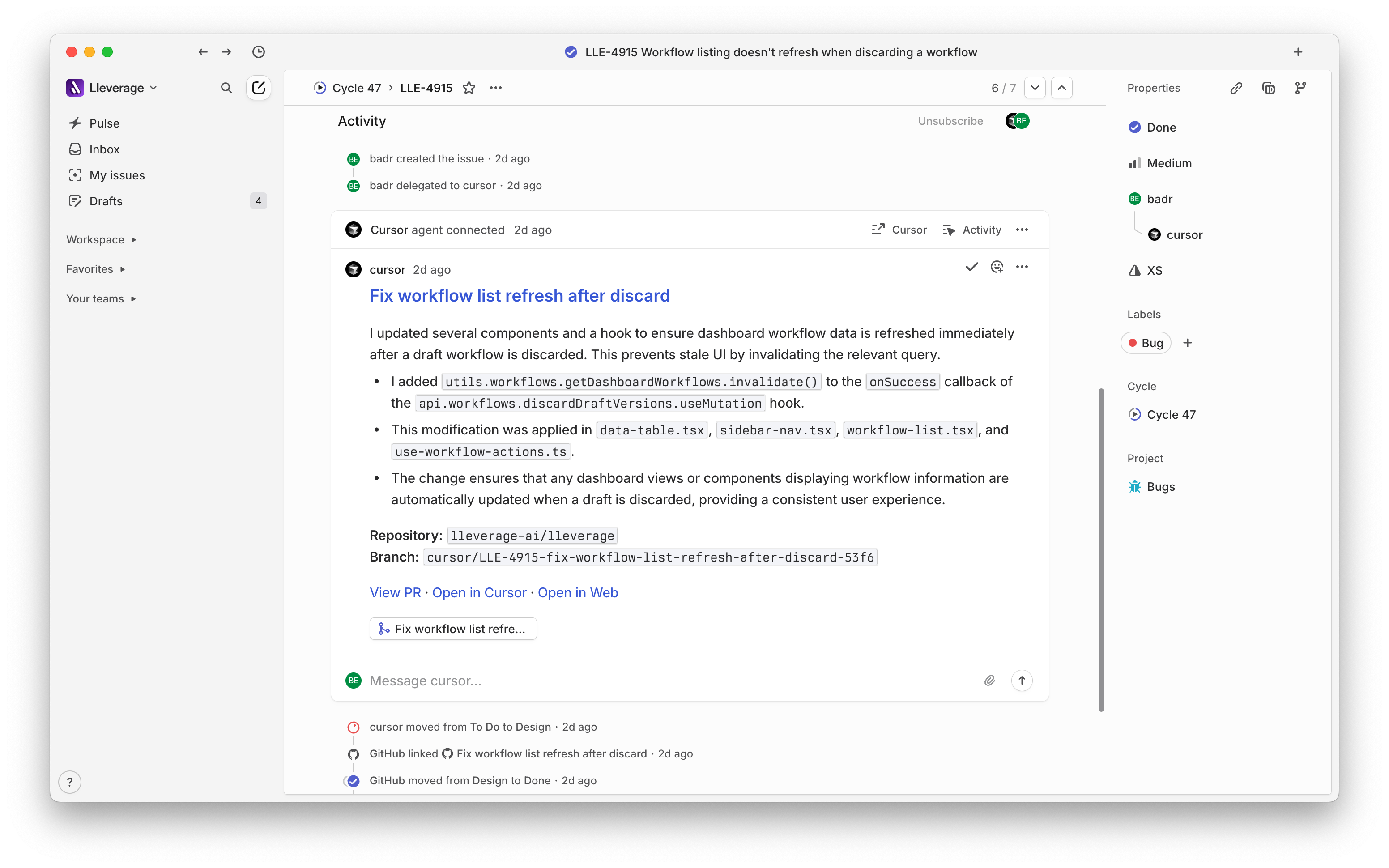

Bug report in Slack → React with 🎫 (Linear Asks) → Triage & assign to @cursor → Agent implements & creates PR → Run tests and create ephemeral preview branch → AI-assisted review (PR-Agent) → Human validation → Merge to main
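To see where people still sit in this loop, the flow above can be written down as data. This is only a sketch: the `Step` type and the `actor` labels are assumptions made for illustration (for example, that merging stays a human action), not values taken from Linear or Cursor.

```typescript
// Who performs a step. These labels are assumptions for illustration.
type Actor = "human" | "automation";

type Step = { name: string; actor: Actor };

// The steps mirror the flow described above, in order.
const PIPELINE: readonly Step[] = [
  { name: "React with 🎫 to create a Linear ticket", actor: "human" },
  { name: "Triage and assign to @cursor", actor: "human" },
  { name: "Agent implements and creates PR", actor: "automation" },
  { name: "Run tests and create ephemeral preview branch", actor: "automation" },
  { name: "AI-assisted review (PR-Agent)", actor: "automation" },
  { name: "Human validation", actor: "human" },
  { name: "Merge to main", actor: "human" },
];

// Returns the names of the steps that need a person's attention.
function humanTouchpoints(steps: readonly Step[]): string[] {
  return steps.filter((step) => step.actor === "human").map((step) => step.name);
}

console.log(humanTouchpoints(PIPELINE));
```

Under these assumptions, none of the human steps involves writing code: they are a reaction in Slack, a triage decision, a review of the preview, and a merge.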

In many cases, this entire flow happens without a developer opening their code editor. The bug gets fixed while they're working on more complex features. When developers need to take over, there's a button in Linear to open the agent's changes in their local Cursor IDE, complete with the full chat history.

Building the Context Hierarchy

We run a monorepo because unified context is everything when working with AI agents. But this requires a planned approach to guiding agents through the combined complexity of our code. We use .cursor/rules/ directories with MDC files to create what I call a "context hierarchy" (see how Cursor rules work):

The Constitution - Our top-level .cursor/rules/base.mdc with core guidelines (snippet):

---

alwaysApply: true

description: Core development guidelines

---

# Critical Rules

- Always search the codebase to make sure functionality doesn't already exist

- Keep it simple stupid - do not over complicate the solution

- In TypeScript, prefer `type` over `interface`

# PNPM Commands

pnpm test:integration --filter @repo/workflow-engine

# Integration tests: Always run from root (requires env variables)

Local Governance - Distributed MDC files using globs to target specific areas. /api has rules for request validation, /components has design system standards, etc.

Continuous Refinement - Since our entire team uses Cursor as their IDE, we continuously improve these rules. When someone notices the agent using a faulty pattern, they can simply use the /Generate Cursor Rules command to create new rules or enhance existing ones. This transforms recurring issues into documented patterns that guide all future development.
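A rough mental model of the layering described above: the always-applied base rule is in context for every file, and glob-scoped rules join it only when the file being edited matches. The sketch below is not Cursor's actual resolution logic. The `Rule` type, the file names, the glob patterns, and the deliberately minimal glob matcher are all hypothetical and exist only to illustrate the idea.

```typescript
// Hypothetical model of a rule file; field names mirror MDC frontmatter.
type Rule = {
  file: string;
  alwaysApply: boolean;
  globs: string[];
};

// Minimal glob support for this sketch only:
// "**/" matches zero or more directories, "*" stays within one path segment.
function globToRegExp(glob: string): RegExp {
  let pattern = "";
  let i = 0;
  while (i < glob.length) {
    if (glob.startsWith("**/", i)) {
      pattern += "(?:.*/)?";
      i += 3;
    } else if (glob.startsWith("**", i)) {
      pattern += ".*";
      i += 2;
    } else if (glob.charAt(i) === "*") {
      pattern += "[^/]*";
      i += 1;
    } else {
      // Escape regex metacharacters so they match literally.
      pattern += glob.charAt(i).replace(/[.+?^${}()|[\]\\]/g, "\\$&");
      i += 1;
    }
  }
  return new RegExp(`^${pattern}$`);
}

// Returns the rule files that would be in context for a given path.
function rulesFor(path: string, rules: readonly Rule[]): string[] {
  return rules
    .filter((rule) => rule.alwaysApply || rule.globs.some((glob) => globToRegExp(glob).test(path)))
    .map((rule) => rule.file);
}

// Hypothetical rule set: one base rule plus two scoped rules.
const exampleRules: Rule[] = [
  { file: ".cursor/rules/base.mdc", alwaysApply: true, globs: [] },
  { file: "api/.cursor/rules/validation.mdc", alwaysApply: false, globs: ["api/**/*.ts"] },
  { file: "components/.cursor/rules/design-system.mdc", alwaysApply: false, globs: ["components/**/*.tsx"] },
];

console.log(rulesFor("api/routes/users.ts", exampleRules));
// → [".cursor/rules/base.mdc", "api/.cursor/rules/validation.mdc"]
```

The point of the hierarchy is visible in the output: an API file never pulls in design system guidance, so the agent's context stays small and relevant.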

Progressive Rule Building

It's fine to put comprehensive documentation across your codebase, but steer agent behavior progressively. Start small and add direction where you see problems:

- Too many tool calls to grasp the codebase → Add architectural context

- Consistent mistakes → Add preventive rules to steer behavior

For example, the agent kept running tests from nested directories instead of the monorepo root. A simple rule fixed it: "Always run integration tests from root with env variables via dotenv." This saves the agent from rediscovering this every session.

Writing Effective Specifications

When our CEO asked "can't we write specs with AI too?" it highlighted where human thinking is critical. Whether the Cursor agent works autonomously from Linear or a developer pulls in an issue through Linear's MCP server, adding direction and specifics to the Linear ticket makes all the difference when the coding agent is activated.

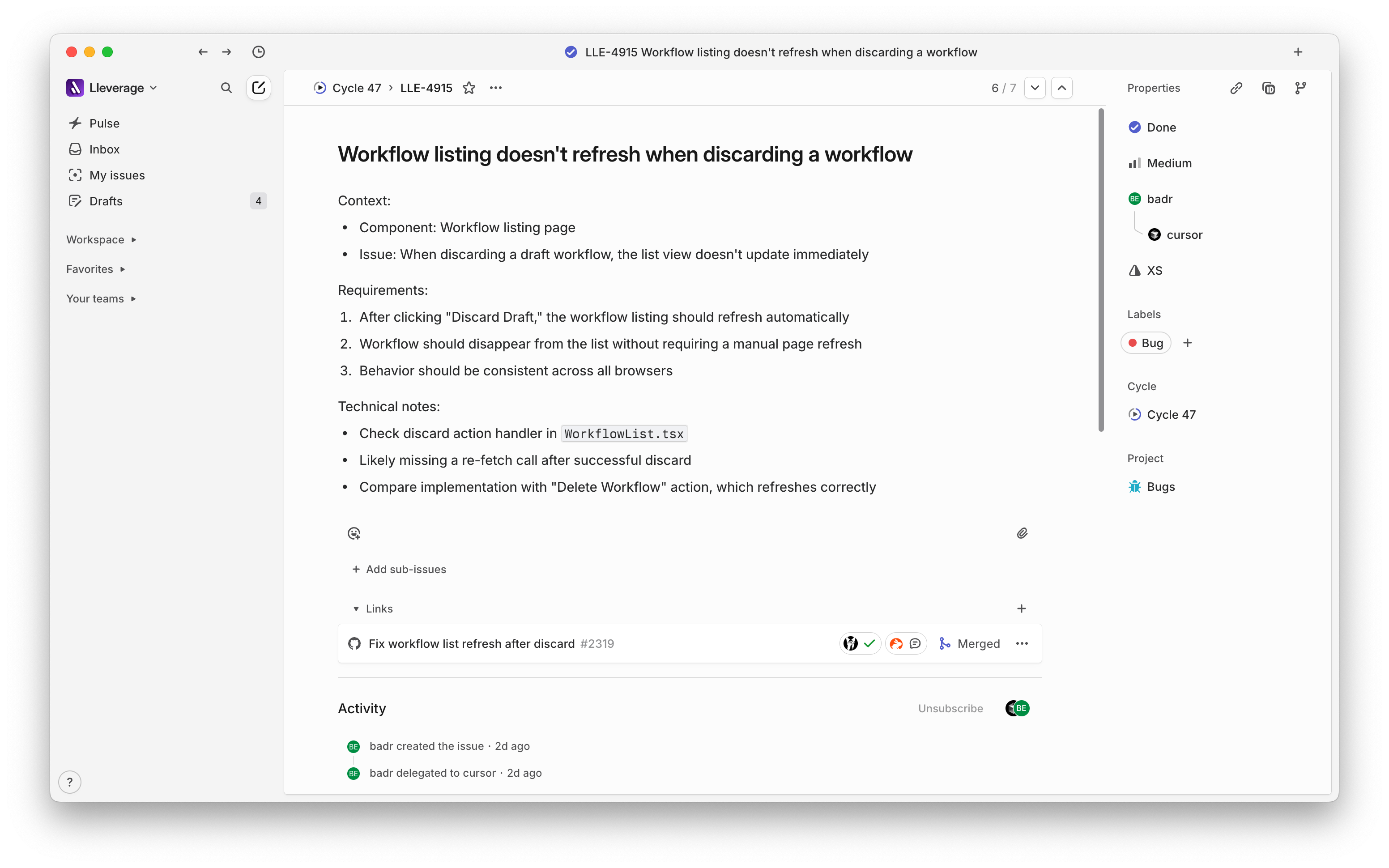

Treat the AI like a brilliant but literal junior developer. Here's how we structure tickets in Linear:

Specificity is a superpower. Clear specs lead to accurate implementation.
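One way to make "specific" concrete is a checklist a ticket must pass before it goes to the agent. The sketch below is hypothetical: the `TicketSpec` fields are assumptions chosen for illustration, not Linear's data model and not the exact ticket structure used at Lleverage.

```typescript
// Hypothetical ticket shape; every field name here is an assumption.
type TicketSpec = {
  title: string;
  currentBehavior: string;
  expectedBehavior: string;
  reproductionSteps: string[];
  acceptanceCriteria: string[];
};

// Lists the fields that are still empty, so a person can fill them in
// before assigning the ticket to a coding agent.
function missingDetails(spec: TicketSpec): string[] {
  const missing: string[] = [];
  if (spec.title.trim() === "") missing.push("title");
  if (spec.currentBehavior.trim() === "") missing.push("currentBehavior");
  if (spec.expectedBehavior.trim() === "") missing.push("expectedBehavior");
  if (spec.reproductionSteps.length === 0) missing.push("reproductionSteps");
  if (spec.acceptanceCriteria.length === 0) missing.push("acceptanceCriteria");
  return missing;
}

// An invented example ticket that still lacks acceptance criteria.
const draftTicket: TicketSpec = {
  title: "Save button stays disabled after fixing a validation error",
  currentBehavior: "The button remains disabled until the page is reloaded.",
  expectedBehavior: "The button is enabled as soon as all fields are valid.",
  reproductionSteps: ["Open the form", "Submit an invalid value", "Correct the value"],
  acceptanceCriteria: [],
};

console.log(missingDetails(draftTicket));
// → ["acceptanceCriteria"]
```

A literal-minded implementer, human or AI, benefits most from the last two fields: reproduction steps say where to look, and acceptance criteria say when to stop.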

When to Use Your AI Teammate

Perfect for AI:

- Well-specced simple frontend features

- Easy-to-reproduce bugs

- Simple CRUD endpoints

- Writing additional tests

- Documentation updates

Keep for Humans:

- Architecture decisions

- Complex business logic

- Performance optimization

- User experience decisions

The pattern is clear: well-defined tasks with existing patterns work great. Strategic decisions need human judgment.

The Compound Effect

Forcing ourselves to write clear rules and specifications for AI has made us better engineers overall. Good documentation helps everyone - the AI agent, new team members, and ourselves when context-switching. These optimizations aren't just for automated agents; they improve our day-to-day development work.

The rules force us to articulate unconscious decisions. Why do we structure components that way? Now it's documented. The Cursor rules become institutional memory that never takes a vacation.

PR-Agent adds another layer - unlike alternatives like CodeRabbit that can overdo it, PR-Agent focuses on discovering real issues and, more importantly, making PRs consumable with good documentation of the changes. This speeds up the review process for AI-written code, and also for more complex PRs written by humans. Combined with ephemeral preview branches, we get fast technical and functional validation before merging.

Where This Is Heading

The investment in building this workflow has paid off in unexpected ways. What started as an experiment in AI automation has changed how we approach development. Bugs reported in Slack get fixed while we're in deep focus on complex features. Our documentation is sharper because it has to be. Our patterns are more consistent because inconsistency breaks automation.

Linear and Cursor go together like peanut butter and jelly; the workflow feels natural, almost inevitable. And these tools are only at the beginning of their evolution. If they already let us ship fixes without opening a code editor, imagine what comes next.

The key insight remains: treat AI like a junior developer, brilliant at execution with clear direction, but not yet capable of the judgment calls that come from experience. Start small, build your rules progressively, and watch your newest teammate improve every day. In the end, teaching your AI well doesn't just make it better, it makes your whole team better.
